Week6 Note

Lecture 7: Synchronization(同步化)

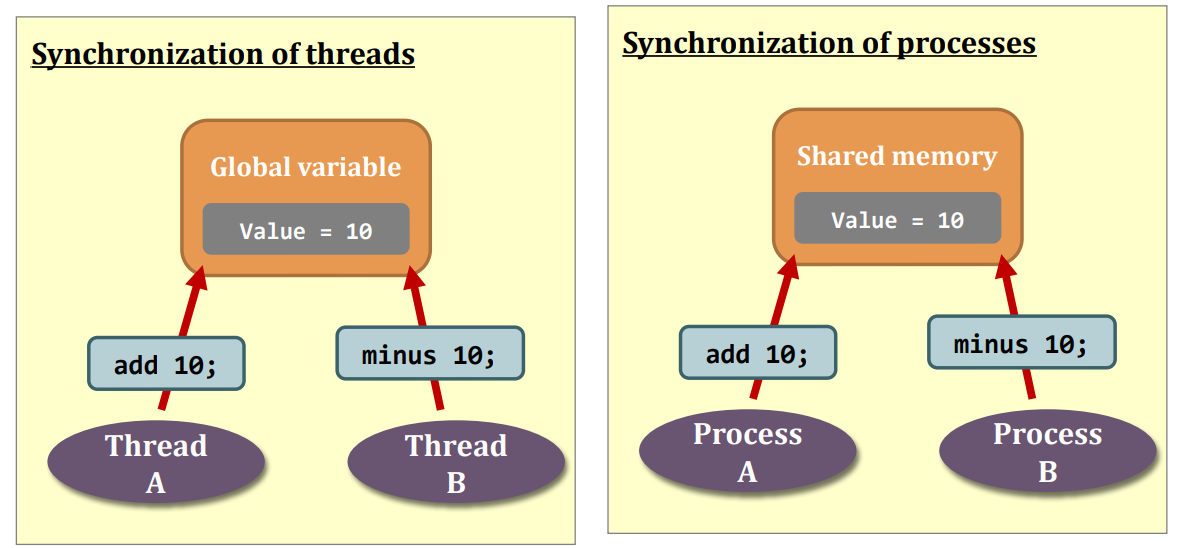

Synchronization of threads/processes

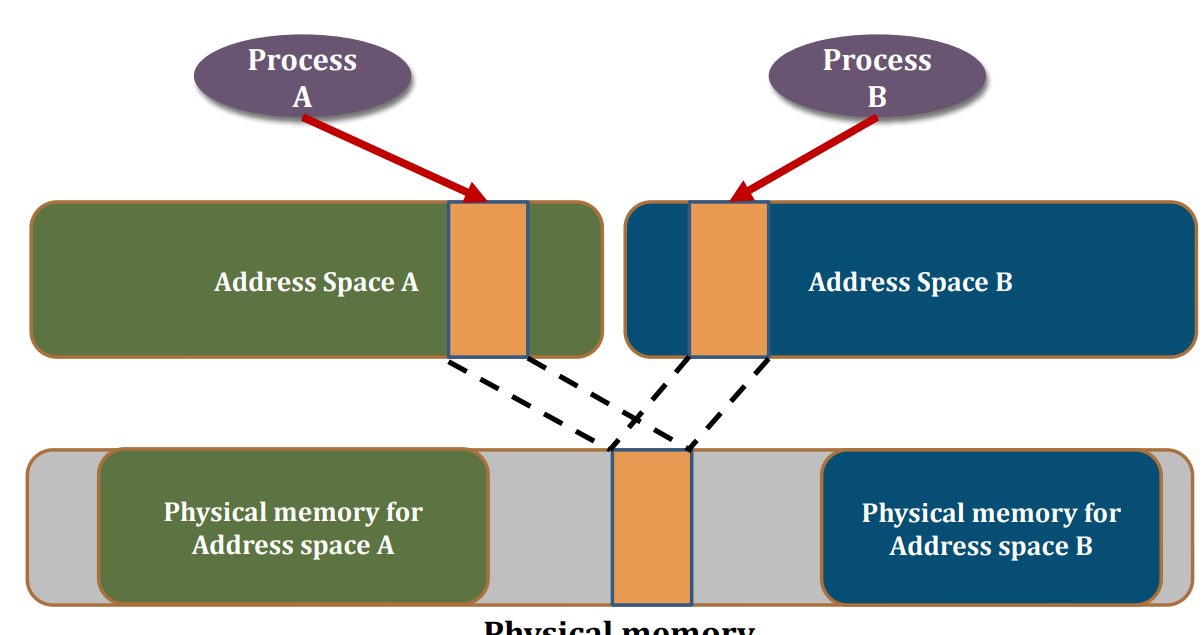

Shared memory between processes

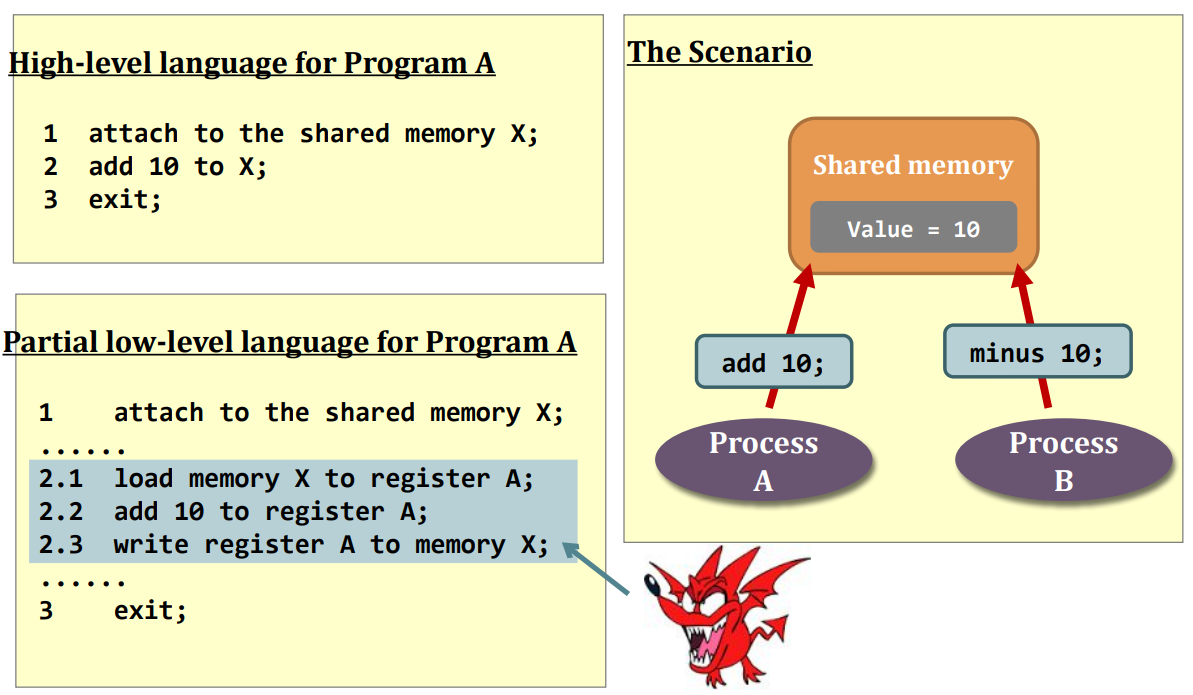

Race Condition: Understanding the problem

Race Condition

Normal execution and Abnormal execution

Normal execution

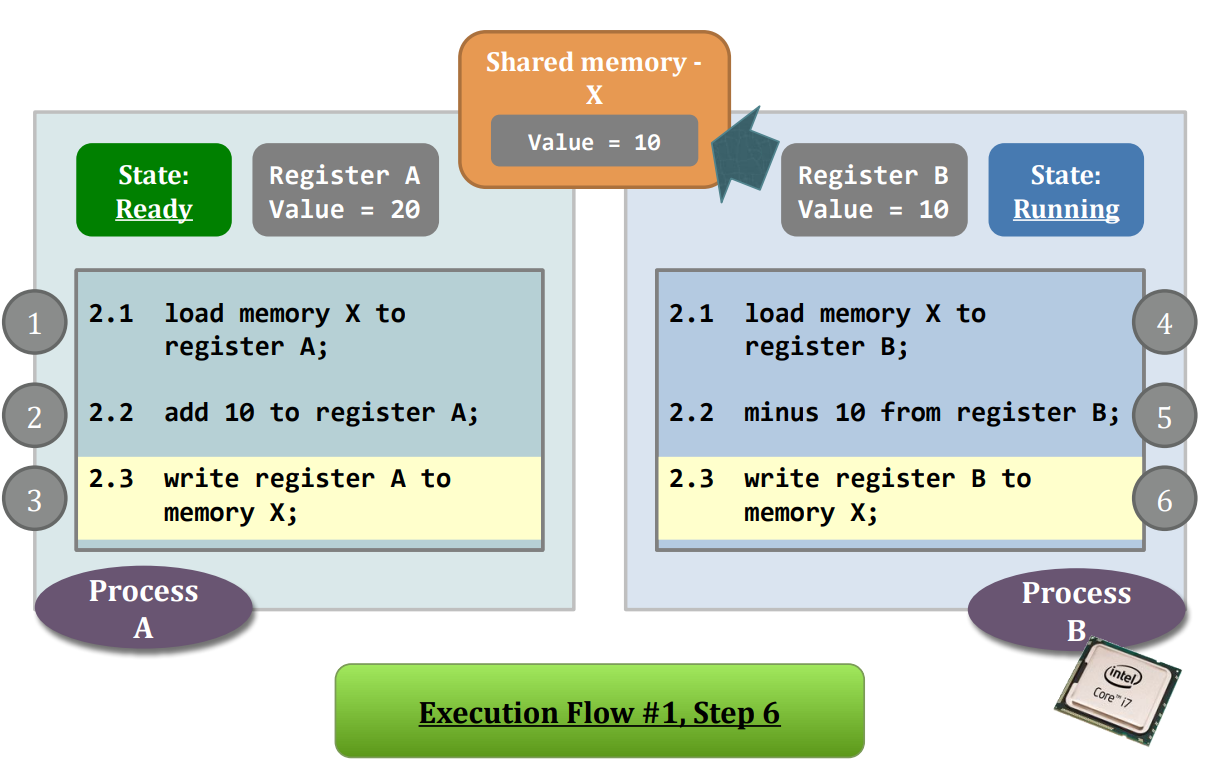

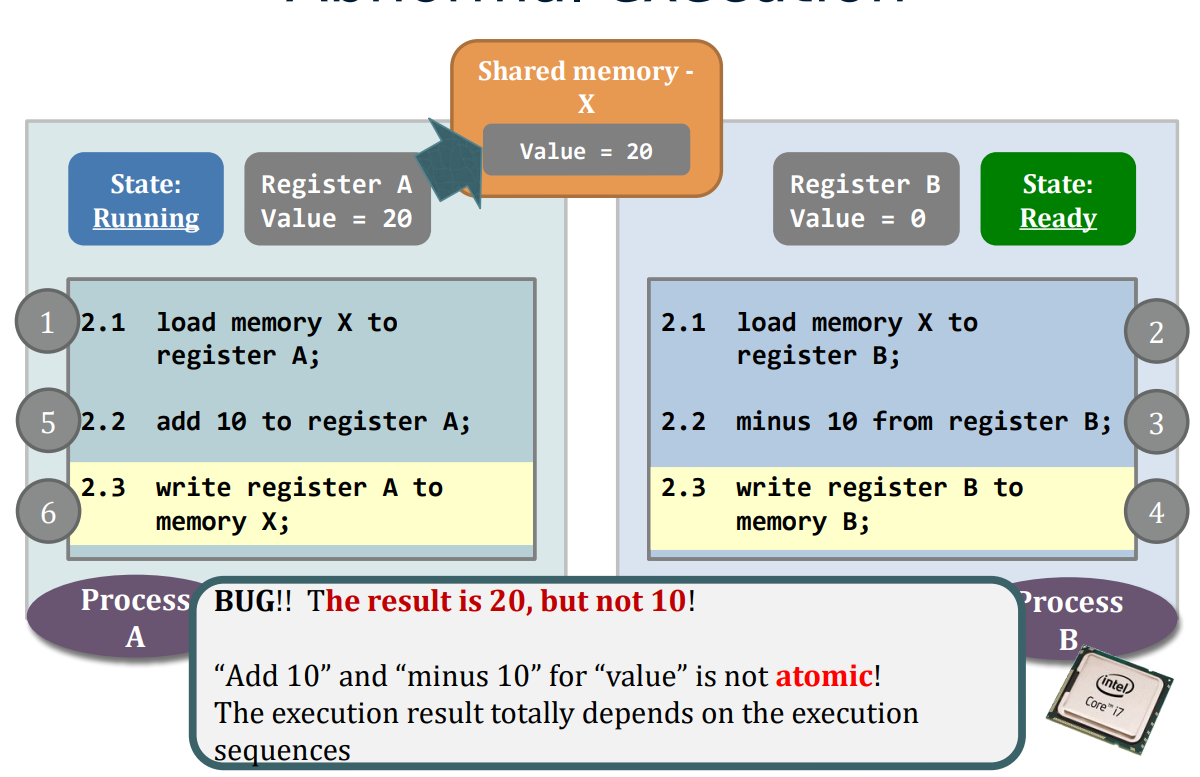

Abnormal execution

- The above scenario is called the race condition. May happen whenever shared object + multiple processes/threads + concurrently

- A race condition means +the outcome of an execution depends on a particular order in which the shared resource is accessed.

- Remember: race condition is always a bad thing and debugging race condition is a nightmare!

- It may end up …

- 99% of the executions are fine.

- 1% of the executions are problematic.

- It may end up …

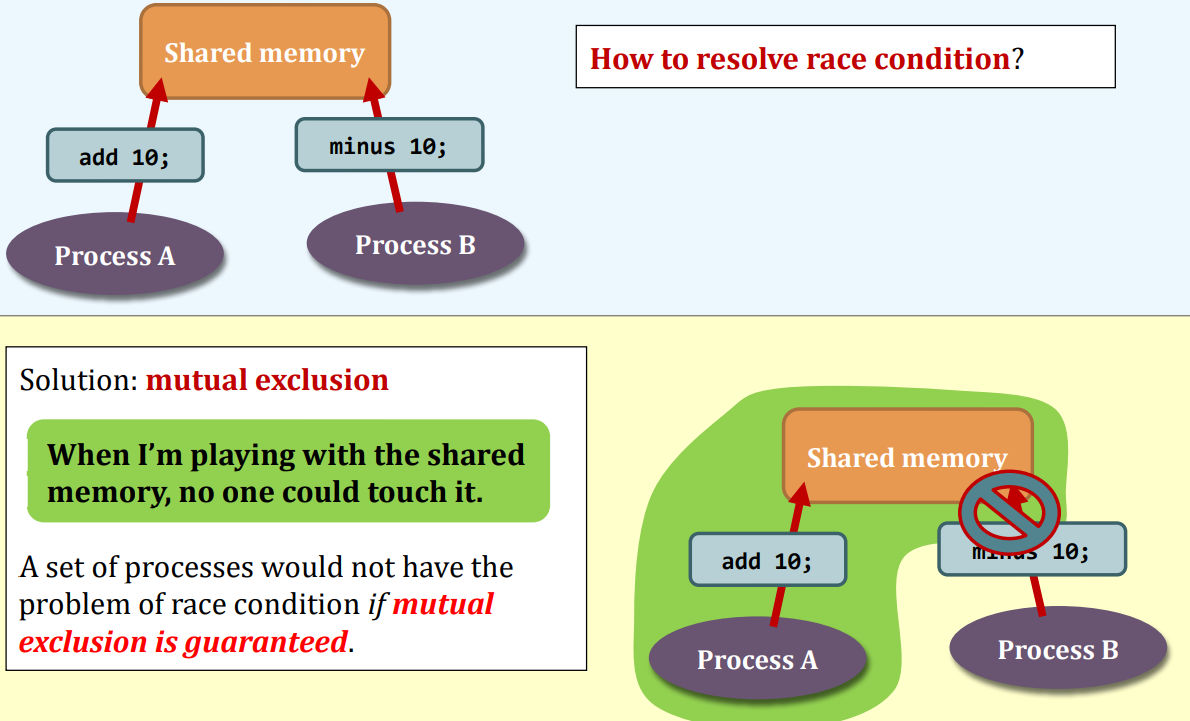

Mutual Exclusion – the cure

Solution - Mutual exclusion

- Shared object is still sharable, but

- Do not access the “shared object” at the same time

- Access the “shared object” one by one

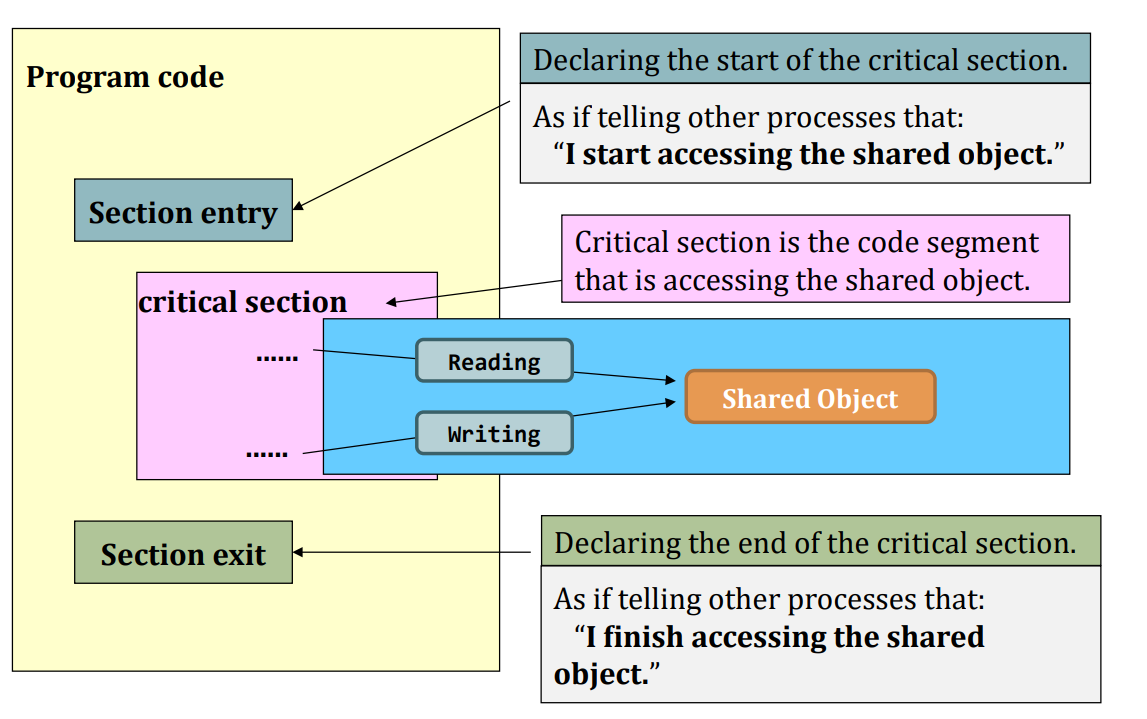

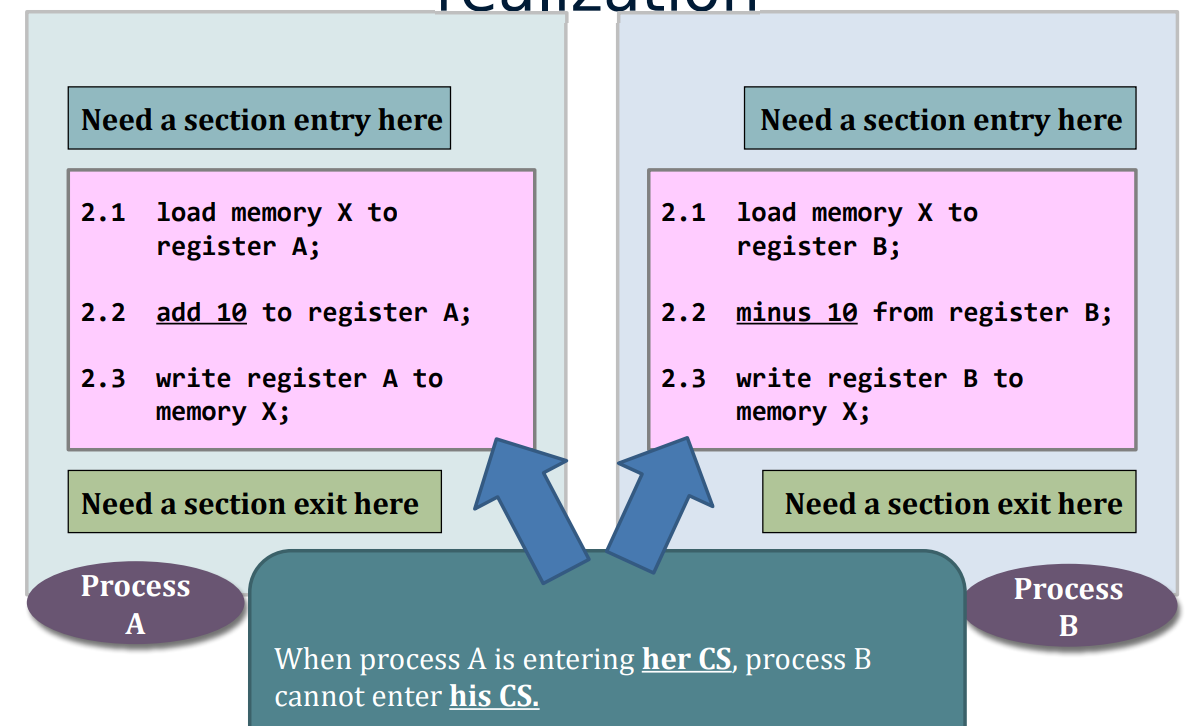

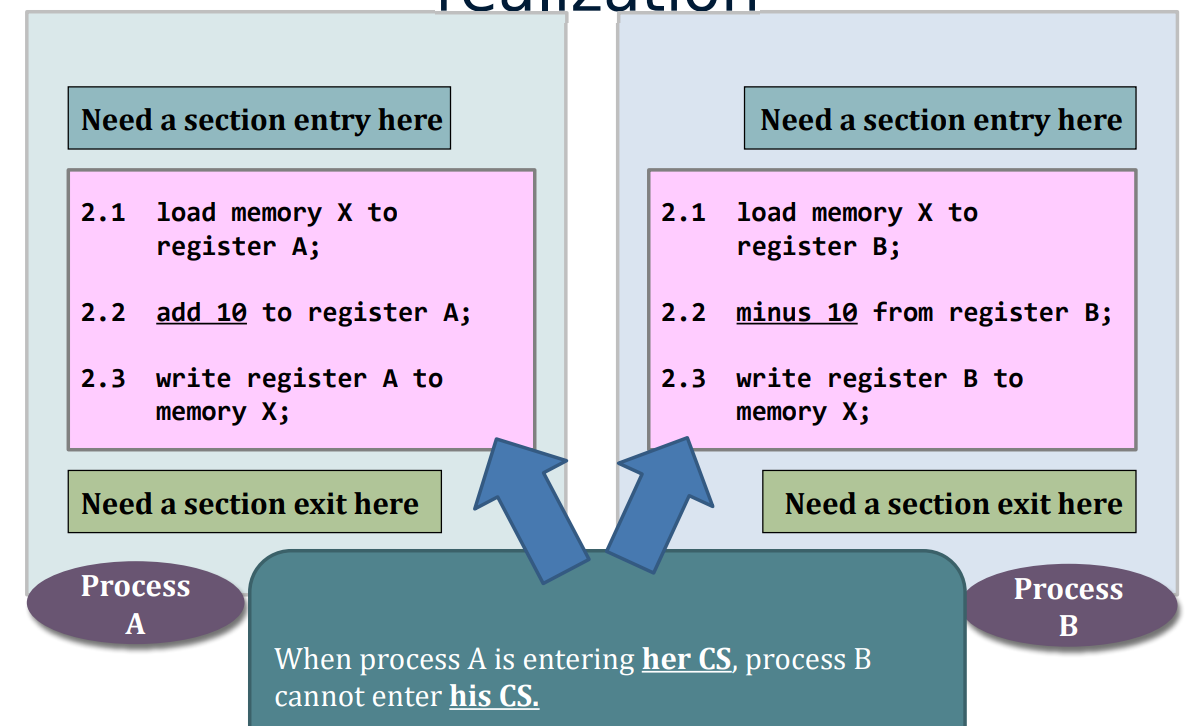

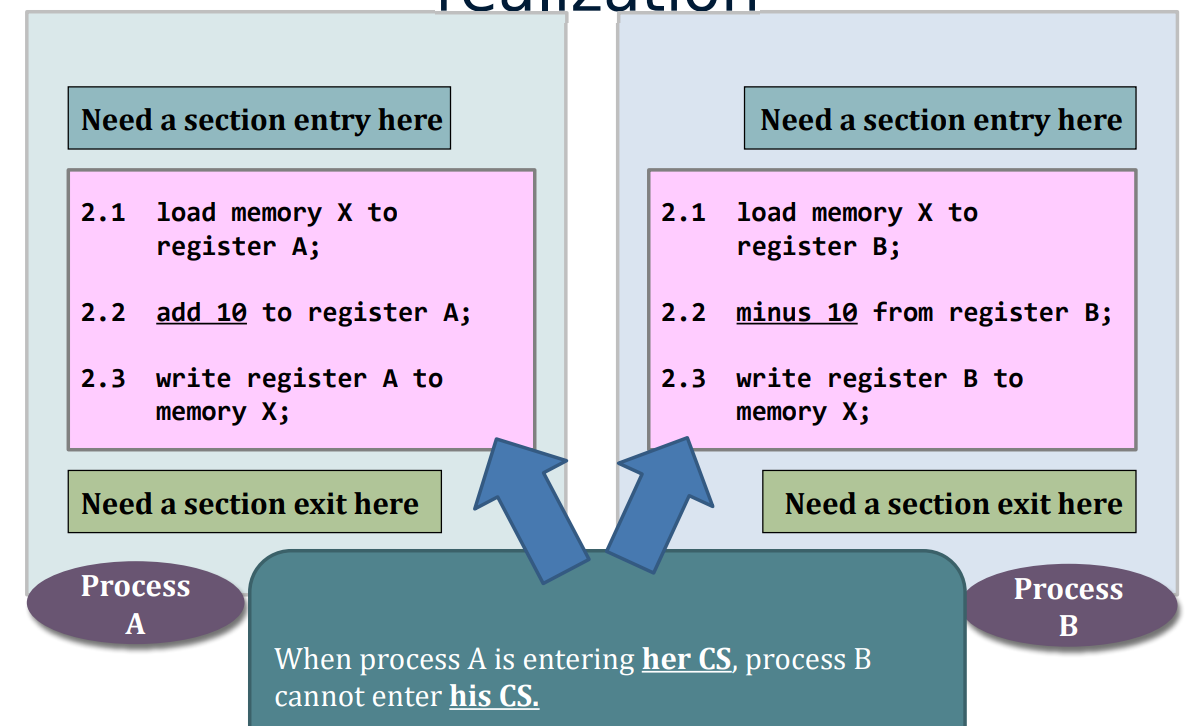

Critical Section - the realization

- Critical section is the code segment that is accessing the shared object

Critical Section (CS) – the realization

A typical mutual exclusion scenario

A typical mutual exclusion scenario

Summary

- Race condition

- Happens when programs accessing a shared object

- The outcome of the computation totally depends on the execution sequences of the processes involved

- Mutual exclusion is a requirement

- If it could be achieved, then the problem of the race condition would be gone.

- A critical section is the code segment that access shared objects.

- Critical section should be as tight as possible>

- Well, you can set the entire code of a program to be a big critical section

- But, the program will have a very high chance to block other processes or to be blocked by other processes

- Critical section should be as tight as possible>

- Note that *one critical section can be designed for accessing more than one shared objects**.

- Implementing section entry and exit is a challenge.

- The entry and the exit are the core parts that guarantee mutual exclusion

- Unless they are correctly implemented, race condition would appear.

- Mutual exclusion hinders(阻碍) the performance of parallel computations.

Entry and exit implementation - requirements

- Requirement #1. Mutual Exclusion

- No two processes could be simultaneously go inside their own critical sections.

- Requirement #2. Bounded Waiting

- Once a process starts trying to enter her CS, there is a bound on the number of times other processes can enter theirs.

- Requirement #3. Progress

- Say no process currently in C.S.

- One of the processes trying to enter will eventually get in.

#0 – disabling interrupt for the whole CS

- Aim

- To disable context switching when the process is inside the critical section.

- Effect

- When a process is in its critical section, no other processes could be able to run

- Correctness?

- Uni-core: Correct but not permissible

- at userspace: what if one writes a CS that loops infinitely and the other process(e.g., the shell) never gets the context switch back to kill it.

- Multi-core:Incorrect

- If there is another core modifying the shared object in the memory(unless you disable interrupts on all cores)

- Uni-core: Correct but not permissible

Achieving Mutual Exclusion

- Lock-based

- Use yet another shared objects: locks

- What about race condition on lock?

- Atomic instructions: instructions that cannot be "interrupted"

- Spin-based lock

- Process synchronization

- Basic spinning using 1 shared variable

- Peterson's solution: Spin using 2 shared variables

- Thread synchronization

pthread_spin_lock

- Process synchronization

- Sleep-based lock

- Process synchronization

- POSIX semaphore(信号标,旗语)

- Thread synchronization

pthread_mutex_lock

- Process synchronization

- Use yet another shared objects: locks

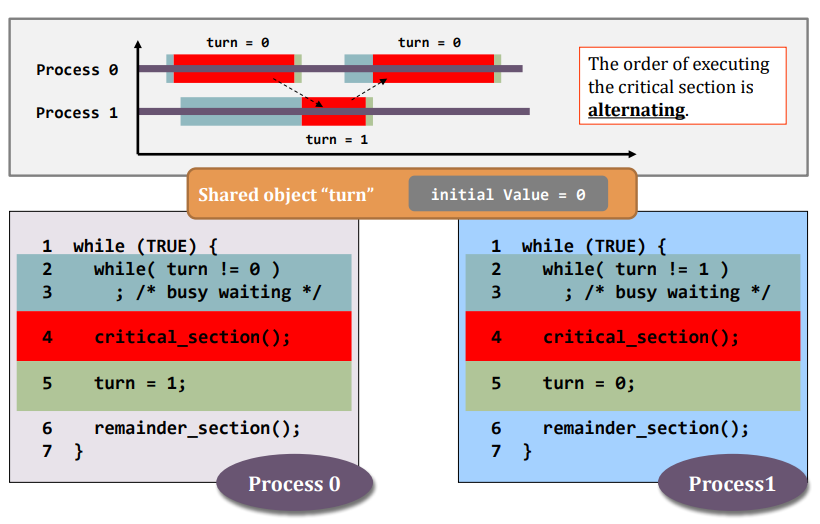

#1: Basic Spin lock(busy waiting)

- Idea.

- Loop on another shared object, turn, to detect the status of other process

- Correct

- but it wastes CPU resources

- OK for short waiting

- Especially these days we have multi-core

- Will not block other irrelevant processes a lot

- OK when spin-time < context-switch-overhead

- Especially these days we have multi-core

- Impose a strict alternation(交替) other

- SOmetimes you give me my turn but I'm not ready to enter CS yet

- Then you have to wait long

- SOmetimes you give me my turn but I'm not ready to enter CS yet

#1: Basic Spin lock violates progress

- Consider the following sequence:

- Process0 leaves cs(), set turn=1

- Process enter cs(), leaves cs()

- set turn=0, work on

remainder_section_slow()

- set turn=0, work on

- Process 0 loops back and enter cs() again, leaves cs(), set turn=1

- Process 0 finishes its

remainder_section(), go back to top of the loop- It can't enter its cs(), as turn=1

- That is process0 gets blocked, but Process 1 is outside its cs(), it is at its

remainder_section_slow()

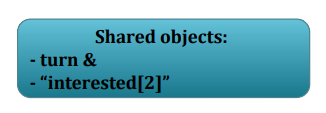

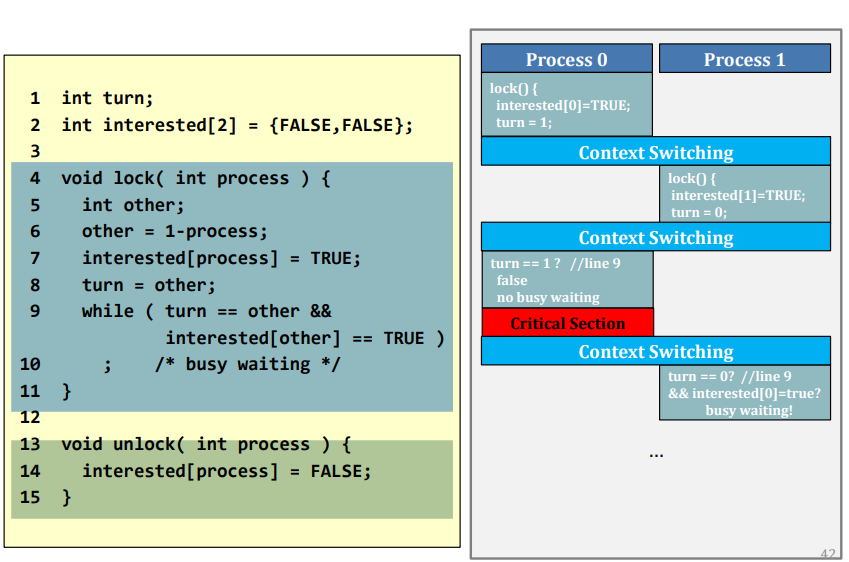

#2: Spin Smarter(by Peterson's solution)

- Highlight:

- Use one more extra shared object: interested

- If I am not interested

- I let you go

- If we are both interested

- Takes turns

- If I am not interested

- Use one more extra shared object: interested

1 | int turn; /* who is last enter cs */ |

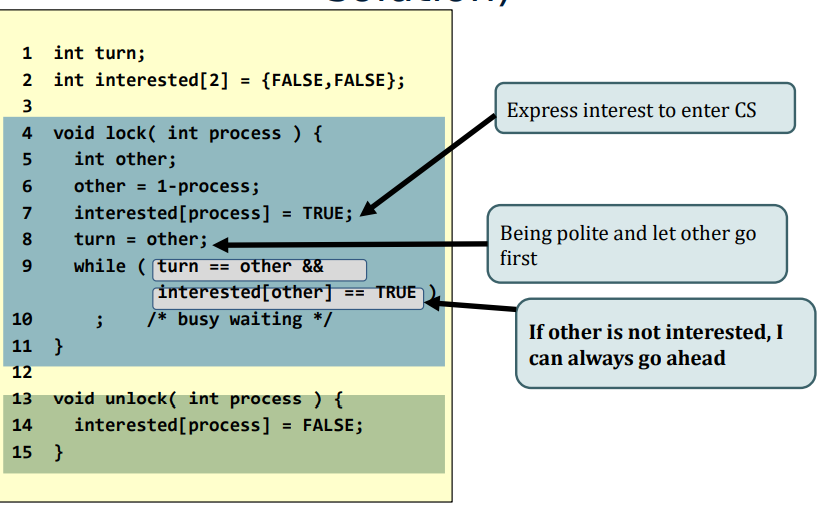

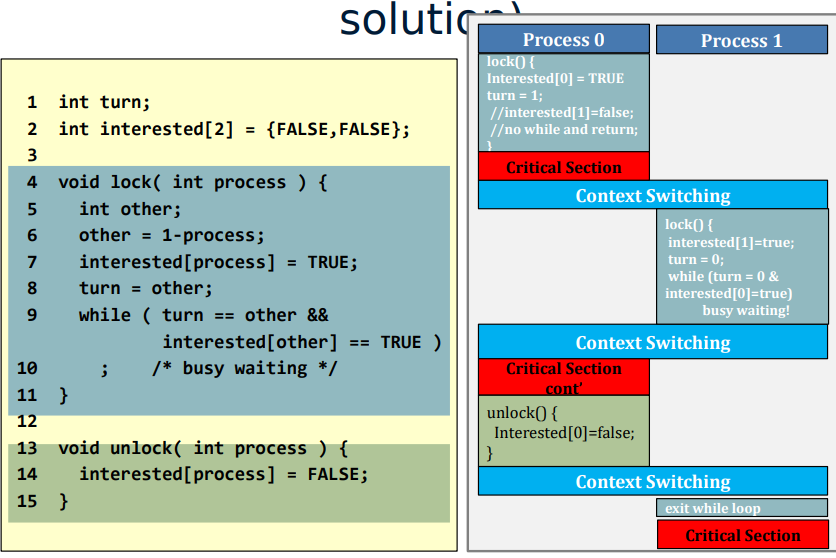

Case 1

Case 2

Spin Smarter (by Peterson’s solution)

- Busy waiting

- shared variable turn for mutual exclusion

- shared variables interest to resolve strict alternation

- Peterson’s solution satisfies all three criteria! (Why?) > “It satisfies the three essential criteria to solve the critical section problem, provided that changes to the variables turn, interest[0], and interest[1] propagate immediately and atomically.”---wikipedia

- Suffer from priority inversion problem

Peterson’s solution satisfies three criteria

- Mutual exclusion

- interested[0] == interested[1] == true

- turn == 0 or turn == 1, not both

- Progress

- If only P0 to enter critical section

- interested[1] == false, thus P0 enters critical section

- If both P0 and P1 to enter critical section

- interested[0] == interested[1] == true and (turn == 0 or turn == 1)

- One of P0 and P1 will be selected

- If only P0 to enter critical section

- Bounded-waiting

- If both P0 and P1 to enter critical section, and P1 selected first

- When P1 exit, interested[1] = false

- If P0 runs fast: interested[1] == false, P0 enters critical section

- If P1 runs fast: interested[1] = true, but turn = 0, P0 enters critical section

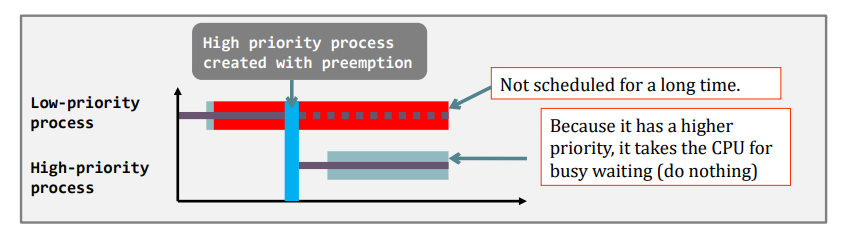

Peterson spinlock suffers from Priority Inversion

- Priority/Preemptive Scheduling (Linux, Windows… all OS..)

- A low priority process L is inside the critical region, but …

- A high priority process H gets the CPU and wants to enter the critical region.

- But H can not lock (because L has not unlock)

- So, H gets the CPU to do nothing but spinning

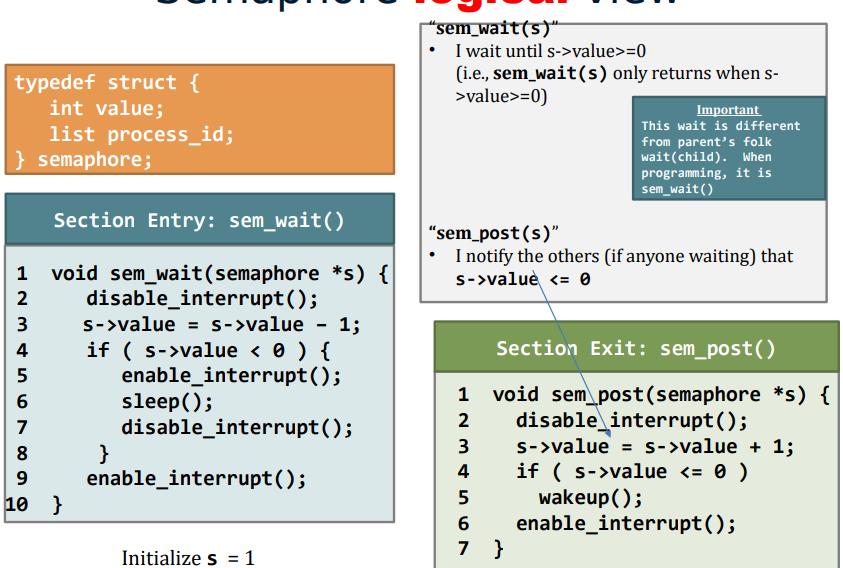

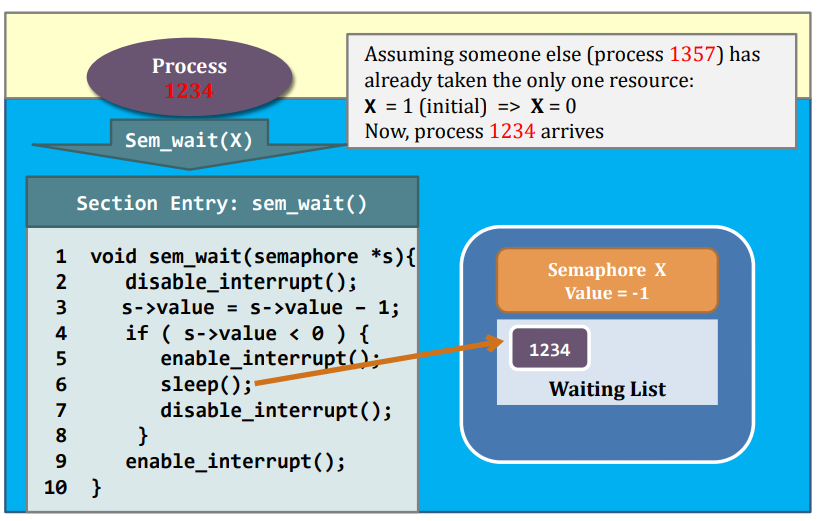

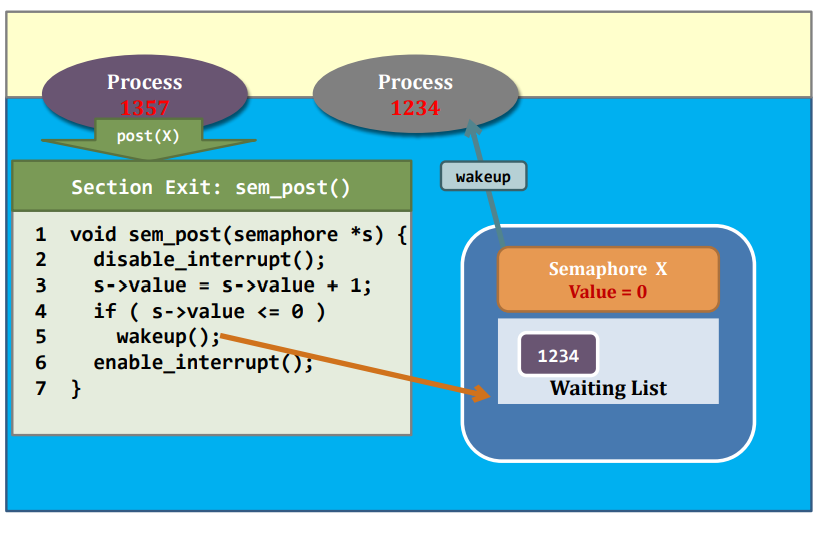

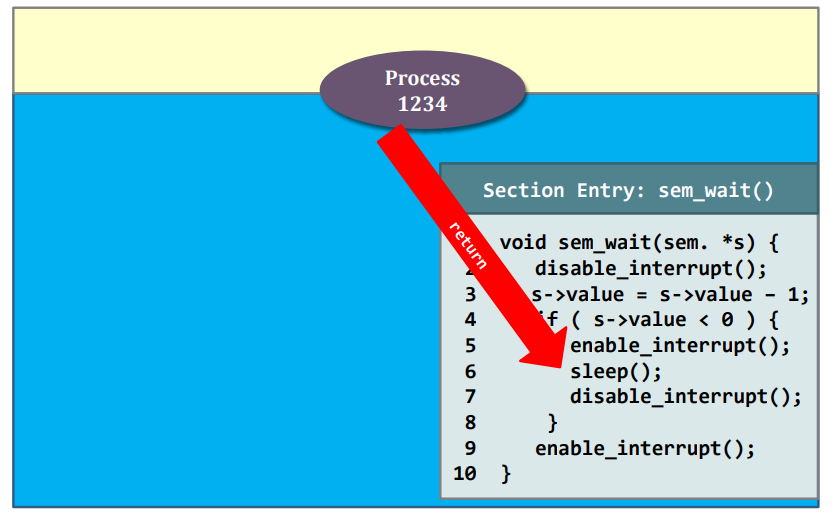

#3: Sleep-based lock: Semaphore

- Semaphore is just a struct, which includes

- an integer that counts the # of resources available

- Can do more than solving mutual exclusion

- a wait-list

- an integer that counts the # of resources available

- The trick is still the section entry/exit function implementation

- Need to interact with scheduler (must involve kernel, e.g., syscall)

- Implement uninterruptable section entry/exit

- Section entry/exit function are short

- Compared with Implementation #0 (uninterruptable throughout the whole CS)

- Section entry/exit function are short

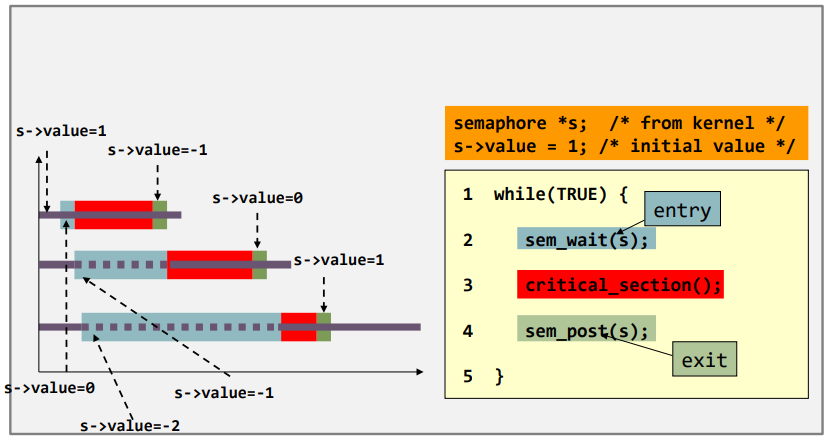

Semaphore logical view

Using Semaphore(User-level)

Using Semaphore beyond mutual exclusion

| Problem | Properties |

|---|---|

| Producer-Consumer Problem | Two classes of processes: producer and consumer;At least one producer and one consumer.[Single-Object Synchronization] |

| Dining Philosopher Problem | They are all running the same program;At least two processes.[Multi-Object Synchronization] |

| Reader Writer Problem | Multiple reads, 1 write |

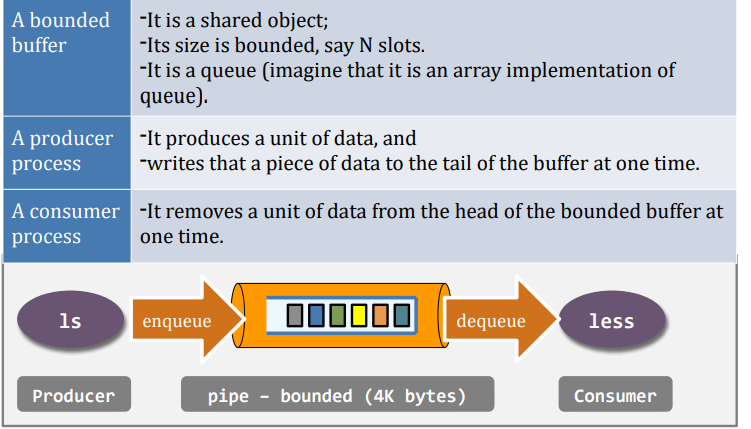

Producer-consumer problem – introduction

- Also known as the bounded-buffer problem.

- Single-object synchronization

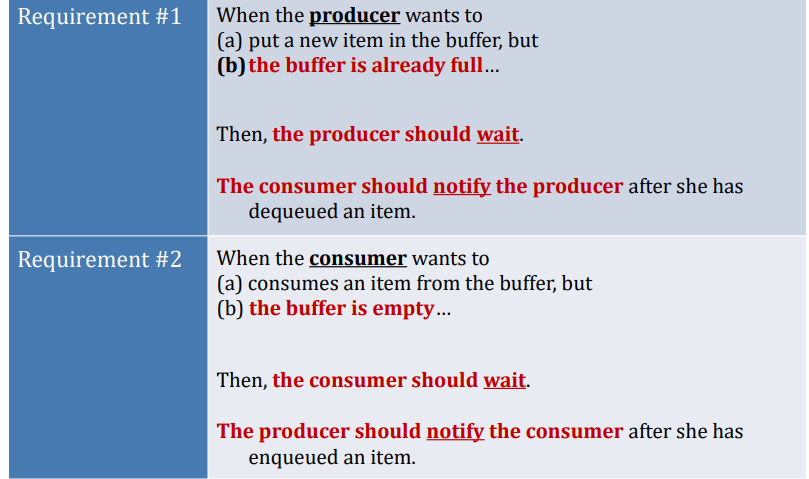

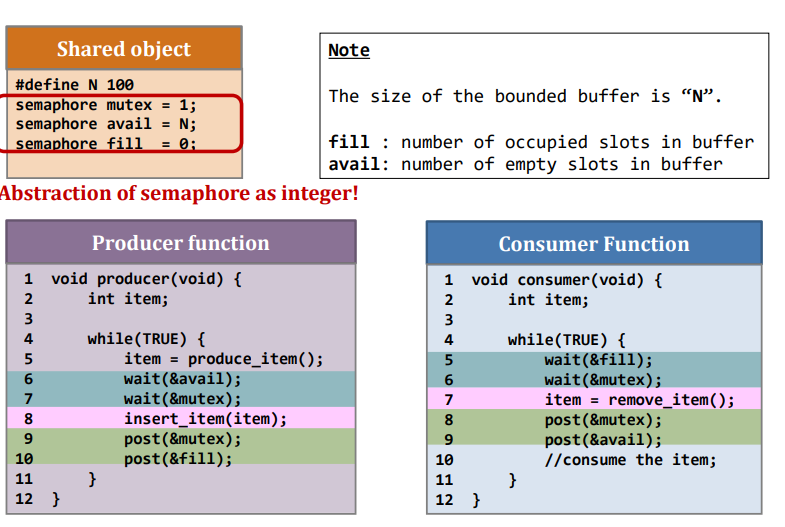

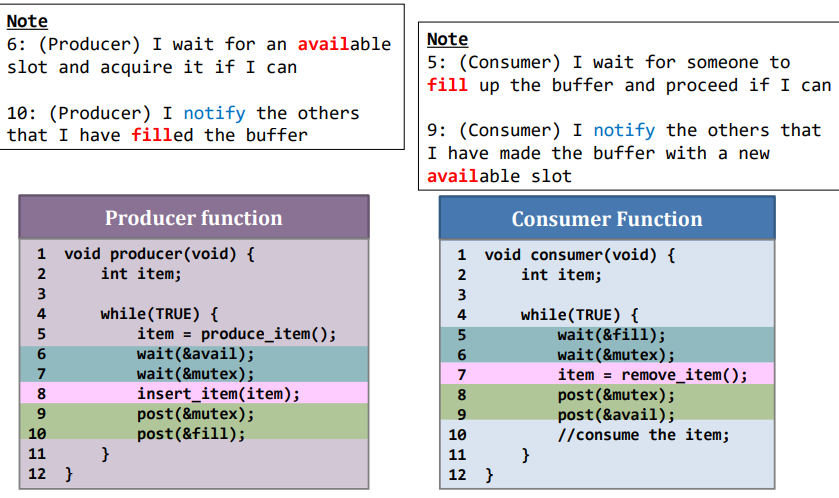

Producer-consumer problem: semaphore

- The problem can be divided into two sub-problems.

- Mutual exclusion

- The buffer is a shared object. MUtual exclusion is needed. Done by one binary semaphore

- Synchronization.

- Because the buffer’s size is bounded, coordination is needed. Done by two semaphores

- Notify the producer to stop producing when the buffer is full

- In other words, notify the producer to produce when the buffer is NOT full

- Notify the consumer to stop eating when the buffer is empty

- In other words, notify the consumer to consume when the buffer is NOT empty

- Notify the producer to stop producing when the buffer is full

- Because the buffer’s size is bounded, coordination is needed. Done by two semaphores

- Mutual exclusion

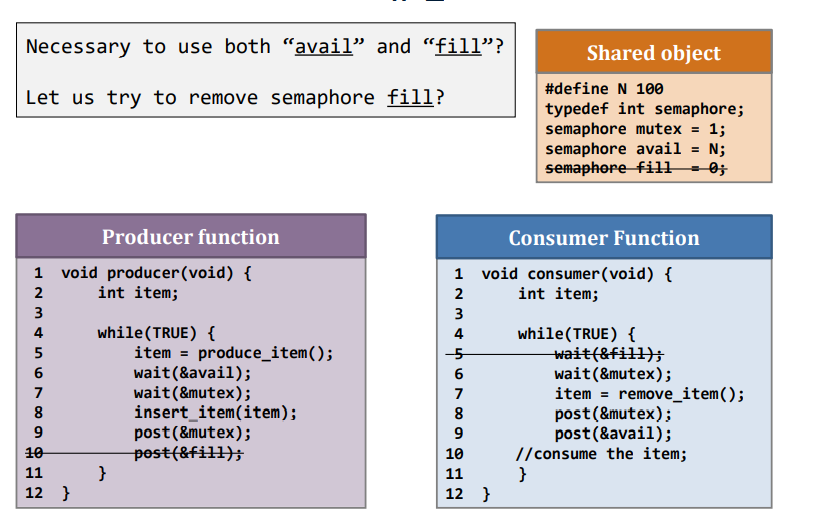

Producer-consumer problem – question #1

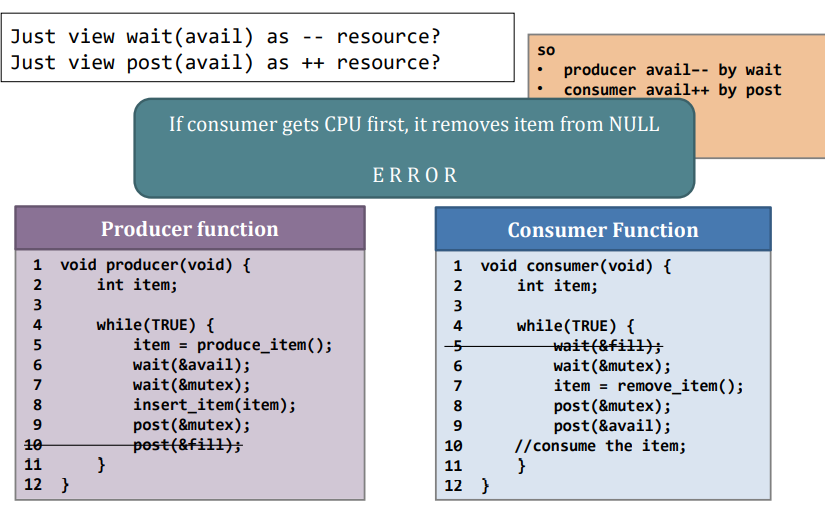

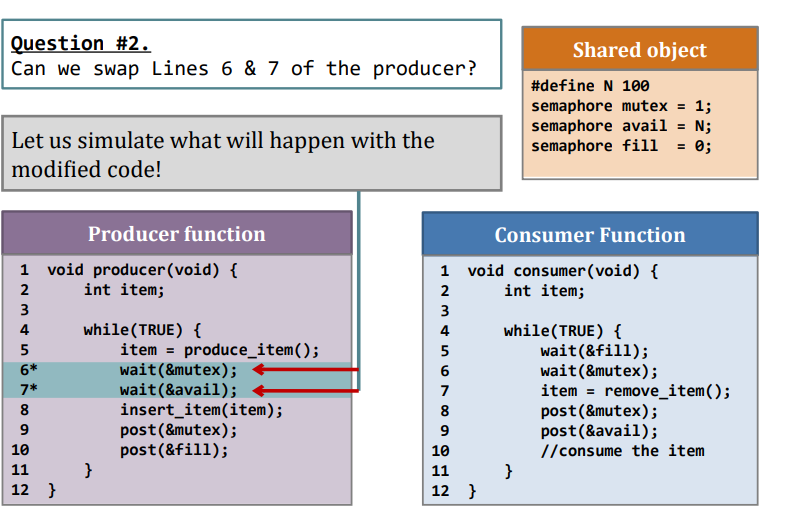

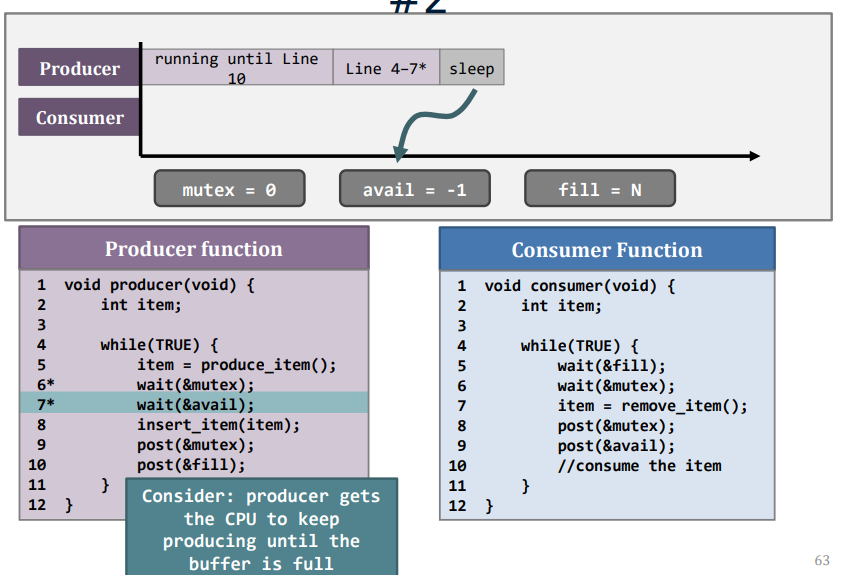

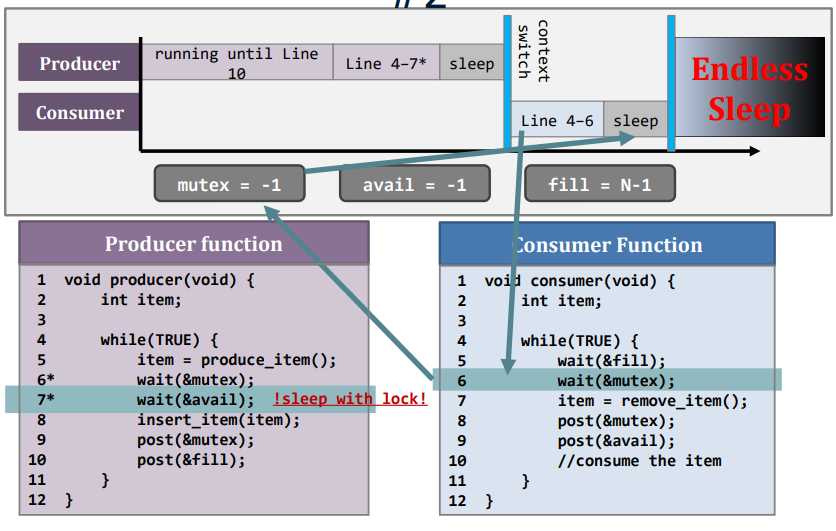

Producer-consumer problem – question #2

- This scenario is called a deadlock

- Consumer waits for Producer’s mutex at line 6

- i.e., it waits for Producer (line 9) to unlock the mutex

- Producer waits for Consumer’s avail at line 7

- i.e., it waits for Consumer (line 9) to release avail

- Consumer waits for Producer’s mutex at line 6

- Implication: careless implementation of the producer-consumer solution can be disastrous(灾难性的).

Deadlock

Summary on producer-consumer problem

- How to avoid race condition on the shared buffer?

- E.g., Use a binary semaphore

- How to achieve synchronization?

- E.g., Use two semaphores: fill and avail

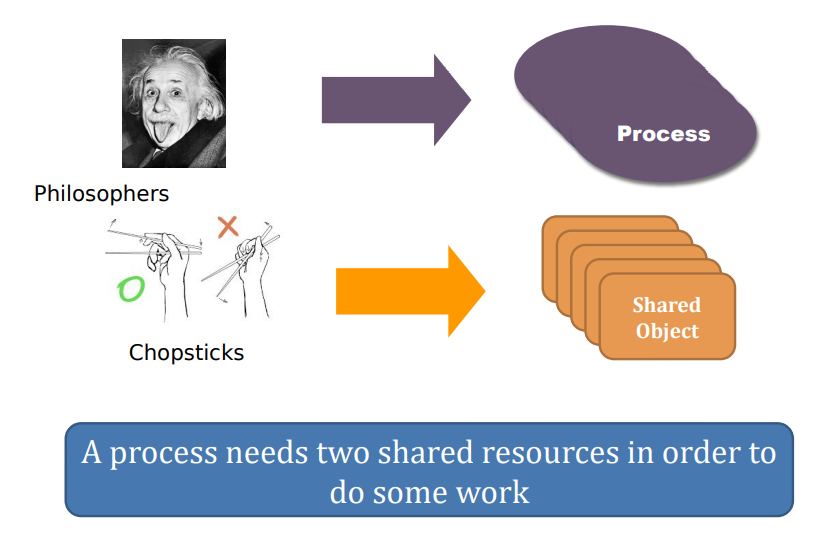

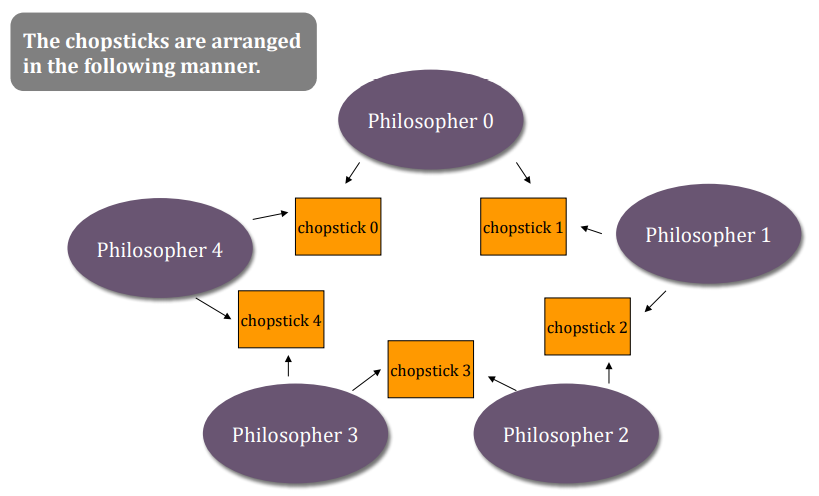

Dining philosopher – introduction

- 5 philosophers, 5 plates of spaghetti, and 5 chopsticks.

- The jobs of each philosopher are to think and to eat +They need exactly two chopsticks in order to eat the spaghetti.

- Question: how to construct a synchronization protocol such that they:

- will not starve to death, and

- will not result in any deadlock scenarios?

- A waits for B’s chopstick

- B waits for C’s chopstick

- C waits for A’s chopstick …

- It’s a multi-object synchronization problem

Dining philosopher - introduction

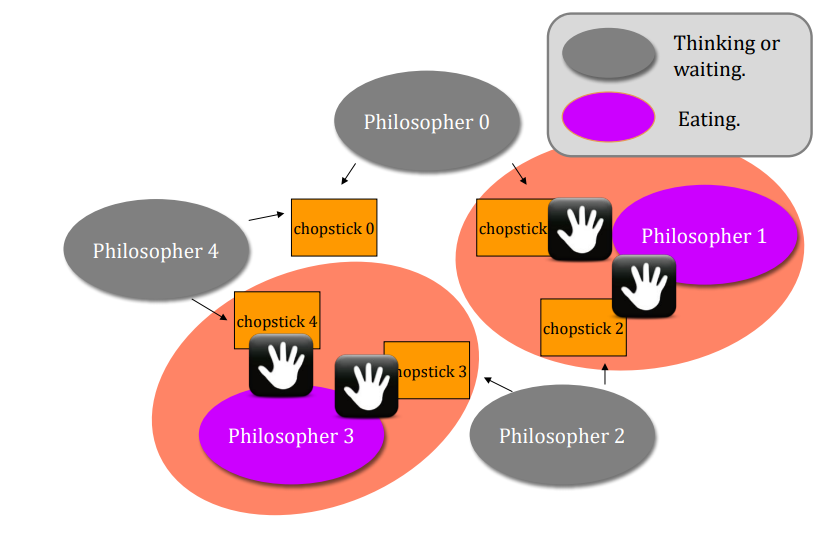

Dining philosopher – requirement #1

- Mutual exclusion

- While you are eating, people cannot steal your chopstick

- Two persons cannot hold the same chopstick

- Let’s propose the following solution

- When you are hungry, you have to check if anyone is using the chopsticks that you need.

- If yes, you wait

- If no, seize both chopsticks

- After eating, put down both your chopsticks.

Dining philosopher – meeting requirement #1?

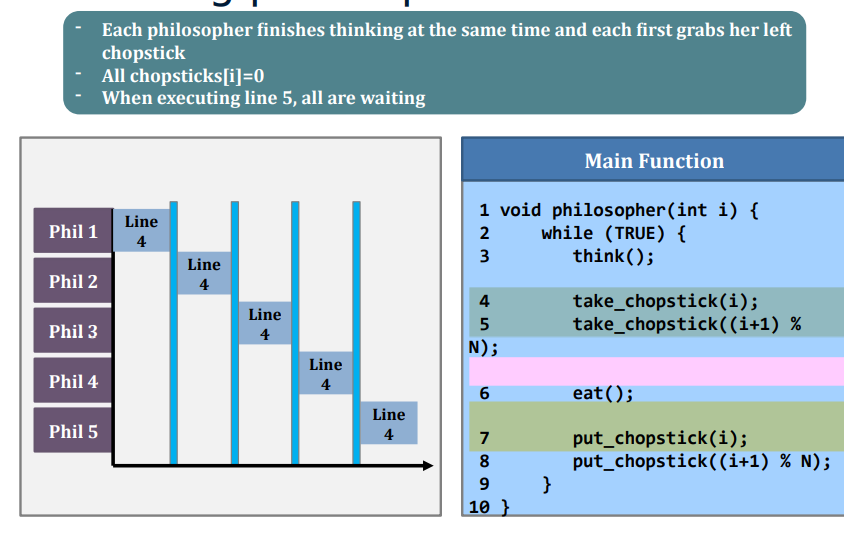

Dining philosopher - deadlock

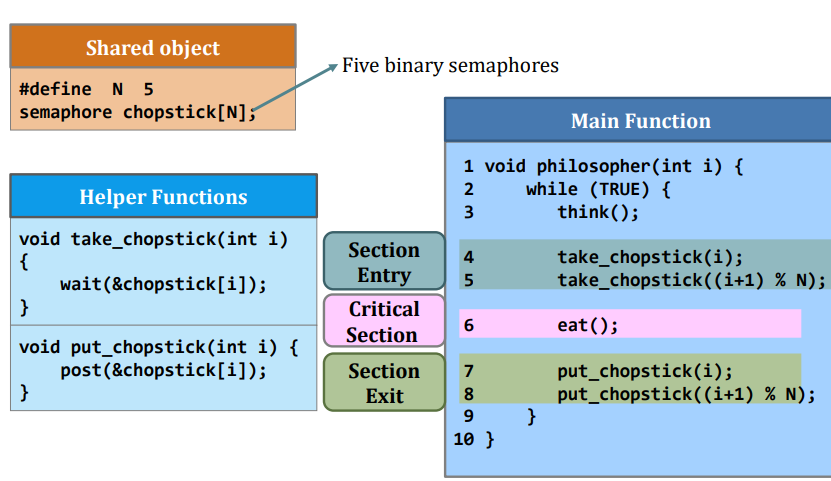

Dining philosopher – requirement #2

- Synchronization

- Should avoid deadlock.

- How about the following suggestions:

- First, a philosopher takes a chopstick.

- If a philosopher finds that she cannot take the second chopstick, then she should put it down.

- Then, the philosopher goes to sleep for a while.

- When wake up, she retries

- Loop until both chopsticks are seized.

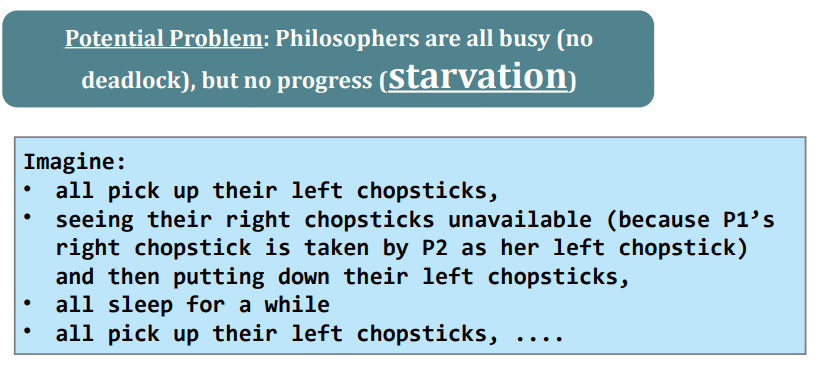

Dining philosopher – meeting requirement #2?

Dining philosopher – before the final solution

Before we present the final solution, let us see what problems we have.

- Problems

- Model each chopstick as a semaphore is intuitive, but may cause deadlock

- Using sleep() to avoid deadlock is effective, yet creating starvation.

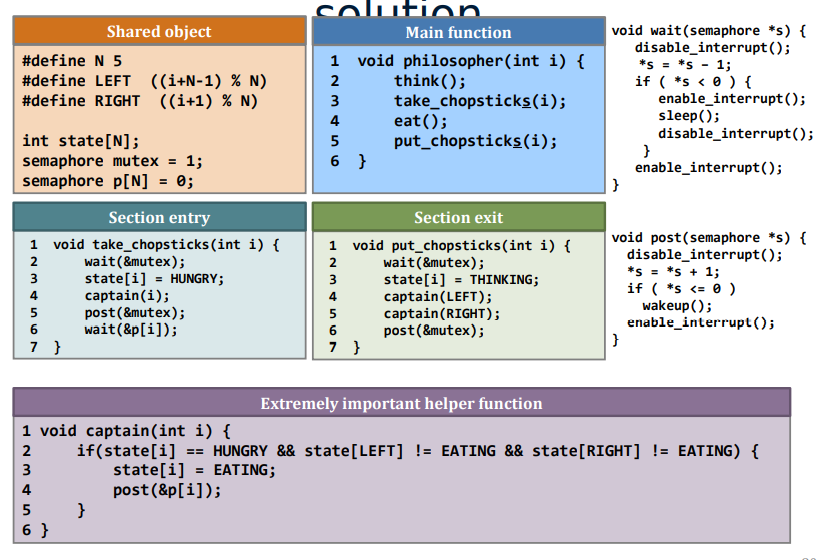

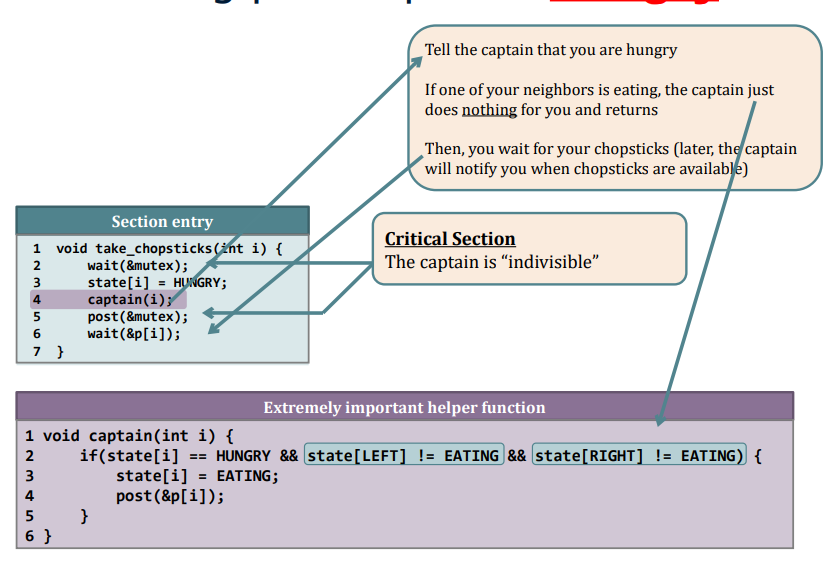

Dining philosopher – the final solution

Dining philosopher – Hungry

Dining philosopher – Finish eating

Dining philosopher – the core

- 5 philosophers -> ideally how many chopsticks?

- how many chopsticks do we have now?

- Very common in today’s cloud computing multi-tenancy model

Summary on IPC problems

- The problems have the following properties in common:

- Multiple number of processes;

- Processes have to be synchronized in order to generate useful output;

- Each resource may be shared as well as limited, and there may be more than one shared processes.

- The synchronization algorithms have the following requirements in common:

- Guarantee mutual exclusion;

- Uphold(维护) the correct synchronization among processes; and

- (must be) Deadlock-free.

Heisenbugs

- Jim Gray, 1998 ACM Turing Award winner, coined that term

- You find your program P has a concurrency bug

- You insert ‘printf’ statements or GDB to debug P

- Then because of those debugging things added, P behaves normally when you are in debug mode